What does it all mean?

Generative AI, particularly Large Language Models (LLMs), has revolutionized content creation. However, such models sometimes suffer from a phenomenon called hallucination. These are instances when LLMs generate content that is made up, incorrect, or unsupported. To understand the nuances of this problem, let’s explore some scenarios:

The first two are creative hallucinations that can be useful, depending on the application. The last one is a factual hallucination, which is unacceptable. We will discuss this kind of hallucination in the rest of the post.

Factuality refers to the quality of being based on generally accepted facts. For example, stating “Athens is the capital of Greece” is factual because it is widely agreed upon. However, establishing factuality becomes complex when credible sources disagree. Wikipedia gets around such problems by always linking a claimed fact with an external source. For example, consider the statement:

“In his book Crossfire, Jim Marrs gives accounts of several people who said they were intimidated by the FBI into altering facts about the assassination of JFK.”

The factuality of the statement “FBI altered facts about the assassination of JFK” is not addressed by Wikipedia editors. Instead, the factual statement is about the content of Marrs' book, something that can be easily checked by going directly to the source.

This leads us to a useful refinement of factuality: grounded factuality. Grounded factuality is when the output of a LLM is checked against a given context provided to the LLM.

Consider the following example: While Athens being the capital of Greece is a factually correct statement, from 1827 to 1834 the capital was the city of Nafplio. So, in a historical context about Greece at that time, stating that Athens was the capital in 1828 would have been a hallucination.

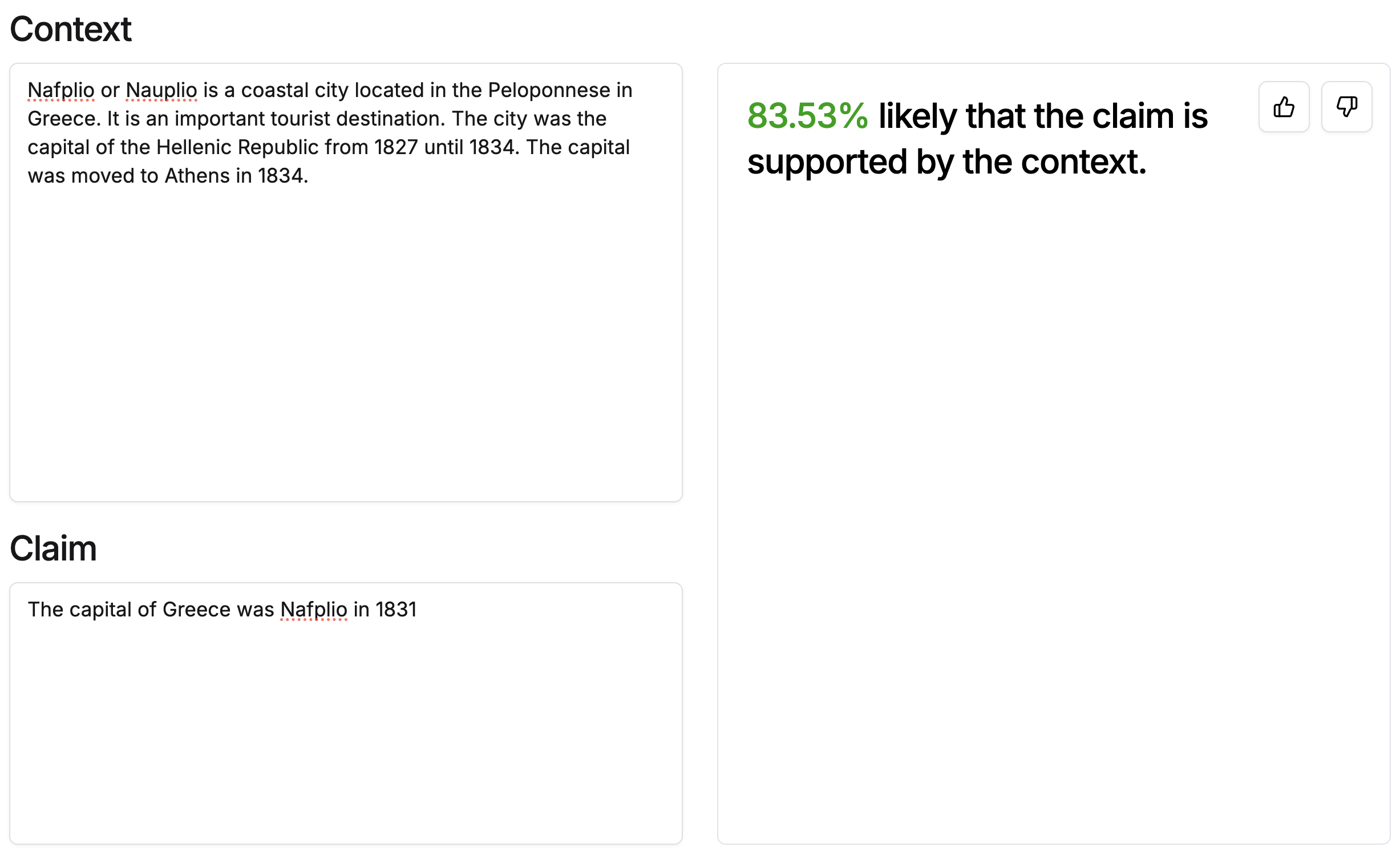

In grounded factuality, the source of truth is given in a context document. Then, the factuality of a claim is checked with respect to that given context. In the NLP and linguistics literature, this problem is also called textual entailment.At Bespoke Labs, we have built a small grounded factuality detector that tops the LLM AggreFact leaderboard. The model detects within milliseconds whether a given claim is factually grounded in a given context and can be tested at playground.bespokelabs.ai (HuggingFace link).

The Bespoke-Minicheck-7B model is lightweight and outperforms all big foundation models including GPT 4o and Mistral-Large 2 for this specialized task. Bespoke-Minicheck-7B is also available by API through our Console.